Deep Fakes: Erosion of Truth and Public Trust

Emmanuel Thomas | Thursday, April 10, 2025

LAGOS, Nigeria – The digital age has given humanity unparalleled access to information and creative tools. However, the same technological advancement has birthed a sinister counterpart: deep fake videos. These AI-generated manipulations, capable of seamlessly superimposing one person's face and voice onto another's actions, pose a significant threat to truth, trust, and societal stability.

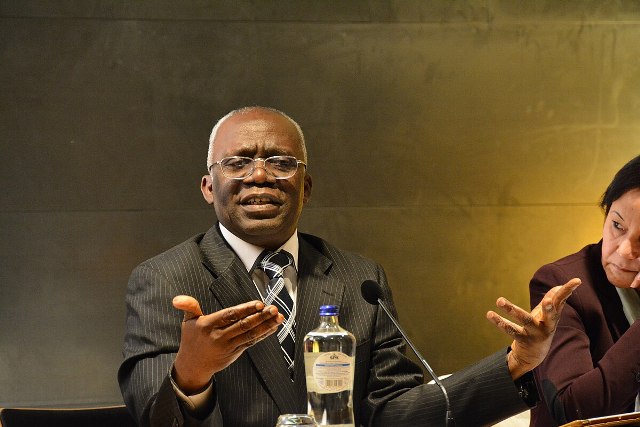

In Nigeria, questionable actors have created deep fake videos that use the images and manipulated voices of prominent people as testimonials to push sales of medical drugs. Those targeted include newscasters and celebrities such as Bukola Coker and Patience Ozokwor, the respected lawyer Femi Falana, SAN, and the Director-General of the World Trade Organization, Dr. Ngozi Okonjo-Iweala. None of these individuals has ever been associated with any such promotional campaign, yet their likenesses have been deployed with artificial intelligence (AI) to drive sales on social media, raising ethical questions among advertising practitioners.

Deep fakes are created using sophisticated machine learning algorithms, particularly deep learning, hence the name. These algorithms are trained on vast datasets of images and videos, allowing them to learn a person’s facial features, expressions, and vocal patterns. This learned information can then be used to realistically map those features onto another person’s face and manipulate their speech, creating a convincing illusion.

The potential for misuse is alarming. While deep fakes can be used for harmless entertainment or artistic expression, their ability to spread misinformation and manipulate public perception is profoundly concerning.

The Anatomy of a Lie:

Political Manipulation: Deep fakes can fabricate speeches or actions that never occurred, damaging reputations and influencing elections. Imagine a deep fake video of a political candidate making inflammatory statements or engaging in compromising behavior. The speed at which such a video can spread online makes it difficult to counteract, even if debunked.

Reputational Damage: Individuals can be targeted with deep fakes that portray them in a negative light, leading to public humiliation, job loss, or even legal repercussions. The ease with which these videos can be created makes anyone a potential target.

Financial Fraud: Deep fakes can be used to impersonate individuals in financial transactions, leading to significant losses. For instance, a deep fake of a CEO could be used to authorize fraudulent wire transfers.

Social Engineering and Scams: Deep fakes can be used to create believable scenarios for social engineering attacks, where victims are tricked into divulging sensitive information. Imagine a deep fake video of a loved one asking for money in an emergency.

Erosion of Trust in Media: As deep fakes become more sophisticated, it becomes increasingly difficult to distinguish between real and fabricated content. This can breed a general distrust of media, as audiences begin to doubt even authentic footage and find it harder to separate truth from falsehood.

The Technological Arms Race:

The fight against deep fakes is an ongoing technological arms race. Researchers are developing detection methods that exploit the subtle inconsistencies and artifacts left behind by AI manipulation. These methods include analyzing facial movements for unnatural patterns, examining audio for inconsistencies, and using adversarial networks to identify deep fake creation techniques.

However, deep fake technology is also evolving rapidly, making detection increasingly challenging. Because the tools used to create deep fakes can be refined to evade existing detection algorithms, defenders must keep innovating and remain vigilant.

Beyond Technology: Societal Implications:

Addressing the deep fake threat requires more than just technological solutions. It necessitates a multi-faceted approach that includes:

Media Literacy: Educating the public about deep fakes and how to critically evaluate online content is crucial. This includes promoting media literacy programs that teach individuals how to identify signs of manipulation.

Legal Frameworks: Developing legal frameworks that address the creation and distribution of malicious deep fakes is essential. This includes laws that hold perpetrators accountable and protect victims from harm.

Industry Standards: Establishing industry standards for content authentication and provenance can help verify the authenticity of digital media. This includes developing technologies that can trace the origin of videos and images.

Ethical Considerations: Fostering ethical discussions about the responsible use of AI is crucial. This includes promoting guidelines for developers and researchers to prevent the misuse of deep fake technology.

Collaboration: International collaboration is needed to develop shared policies and exchange information to combat the spread of deep fakes.

Deep fakes represent a significant challenge to our ability to discern truth and maintain trust in information. As this technology continues to evolve, we must remain vigilant and proactive in our efforts to mitigate its potential harm. The future of truth depends on it.